MuSFA: Multi-Modal Sensory Data Fusion Framework for Autonomous Vehicles Using Advanced AI Techniques

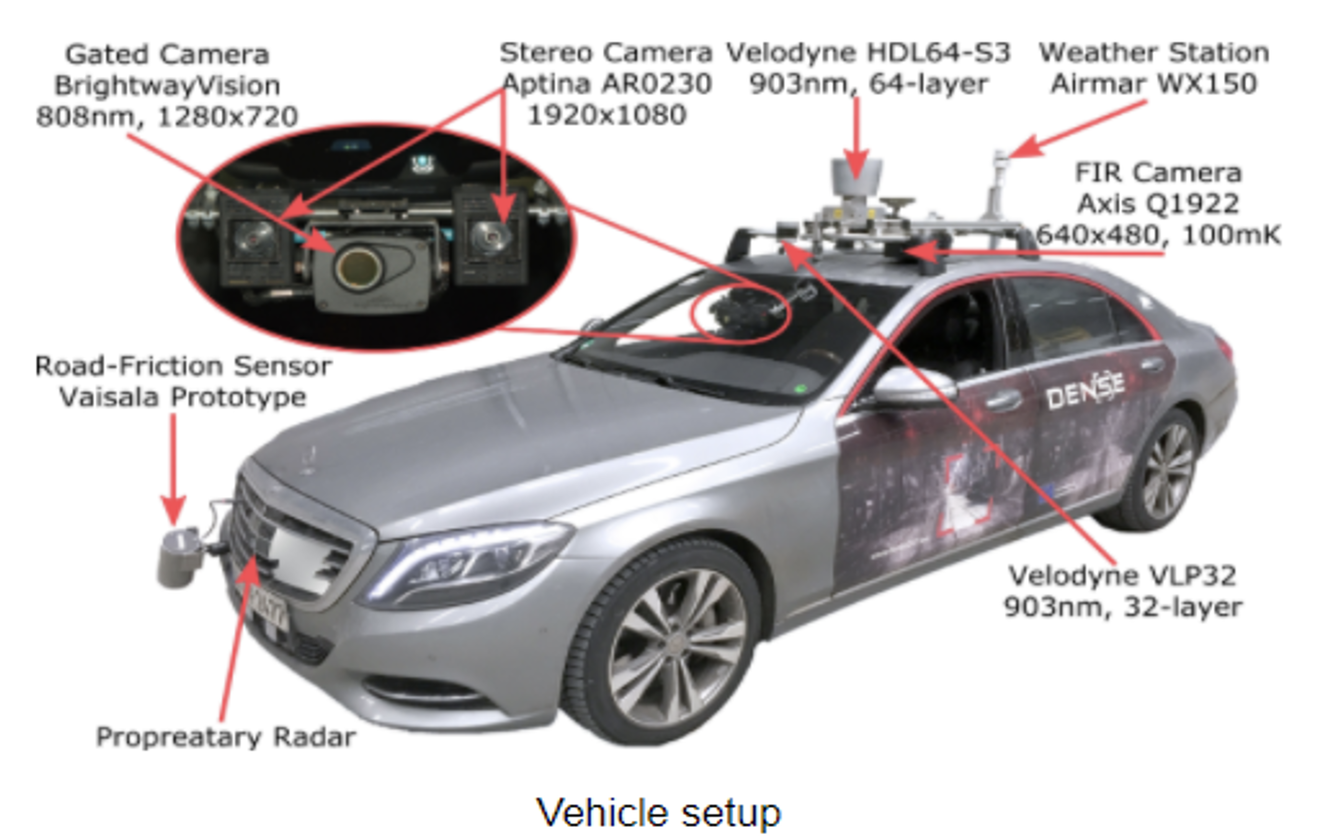

Autonomous driving has emerged as a prominent domain over the past few years. Sensor and communication technologies are its crucial enablers, and advances in both have accelerated its recent evolution. The ITU's IMT-2020 usage scenarios, including ultra-reliable low-latency communication and enhanced mobile broadband, allow real-time information exchange between and among the entities involved, e.g., vehicles and road infrastructure. Autonomous vehicles sense their environment and must make appropriate, instant decisions in reaction to environmental events. Self-driving cars combine a variety of intelligent sensors to perceive their surroundings, such as cameras, radars, LiDARs, sonars, GPS, odometers, and inertial measurement units. The perception systems must be accurate, giving a precise understanding of the environment, and robust enough to work properly in adverse weather conditions and even when some sensors are degraded or defective. The sensor systems must also capture, dynamically and efficiently, both environment data and data describing the autonomous vehicle's own characteristics. Since different devices capture information in different formats, the resulting data is multimodal.
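One common way to keep perception working when a sensor degrades is late fusion of redundant estimates. The sketch below is a minimal illustration, not the MuSFA method: it assumes camera, radar, and LiDAR have already produced distance estimates for the same object, that each sensor's noise variance is known and independent, and that a hypothetical `healthy` flag marks degraded sensors. It combines the remaining estimates by inverse-variance weighting.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeReading:
    """Hypothetical record: one sensor's distance estimate for an already-associated object."""
    sensor: str
    distance_m: Optional[float]  # None if the sensor returned nothing
    variance: float              # assumed known noise variance, in m^2
    healthy: bool = True         # False if diagnostics flag the sensor as degraded


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted average over the usable readings.

    Degraded, missing, or non-positive-variance readings are skipped, so the
    estimate degrades gracefully instead of failing when one sensor drops out.
    Returns (fused_distance_m, fused_variance).
    """
    usable = [r for r in readings
              if r.healthy and r.distance_m is not None and r.variance > 0]
    if not usable:
        raise ValueError("no usable sensor readings to fuse")
    weights = [1.0 / r.variance for r in usable]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, usable)) / total
    return fused, 1.0 / total
```

With the LiDAR flagged as degraded (for example, in dense fog), `fuse_ranges([RangeReading("camera", 20.0, 4.0), RangeReading("radar", 19.0, 1.0), RangeReading("lidar", 19.5, 0.25, healthy=False)])` returns approximately `(19.2, 0.8)`: the radar dominates because its variance is lower, and the fused variance is smaller than that of either remaining input.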

In autonomous driving, instant decision-making for critical maneuvers such as lane changing, platooning, rerouting, braking, and overtaking is vital to traffic safety, and it depends on appropriate pre-processing and organization of vehicular data. Vehicular data for autonomous driving is collected from different sources, such as on-vehicle sensors, other vehicles, and the back ends of roadside sensors, and these sources are multimodal. Pre-processing the raw multimodal sensor data and then fusing it into a unified format that simplifies the processing steps for instant decision-making remains a challenging issue. Moreover, if the data captured by an autonomous vehicle's sensors is not clear, the vehicle may fail to frame decision rules that accurately reflect its environment, which can lead to fatal accidents. Hence, effective pre-processing followed by fusion of the multimodal data into a unified format, which facilitates rule framing for effective decision-making, is an imperative task for autonomous driving.
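To make the idea of a unified format concrete, here is a hypothetical sketch. The message layouts, field names, the 0.2 s staleness limit, and the 2 s time-to-collision threshold are all assumptions, not part of the framework described here. Two sources report the gap to a lead vehicle in different units and clocks; both are converted to one SI-based record, stale records are dropped, and a single braking rule is then written once against the unified record rather than once per source format.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class UnifiedObservation:
    """Hypothetical common record: one clock (seconds) and SI units."""
    t_s: float                # timestamp in seconds
    source: str               # which input produced the record
    distance_m: float         # gap to the lead object in metres
    closing_speed_mps: float  # > 0 when the gap is shrinking, in m/s


def from_radar(msg: dict) -> UnifiedObservation:
    """Assumed radar layout: {"ts_ms", "range_m", "range_rate_mps"}.

    Range rate is assumed negative while approaching, so its sign is flipped.
    """
    return UnifiedObservation(
        t_s=msg["ts_ms"] / 1000.0,
        source="radar",
        distance_m=msg["range_m"],
        closing_speed_mps=-msg["range_rate_mps"],
    )


def from_v2v(msg: dict) -> UnifiedObservation:
    """Assumed V2V layout: {"time_s", "gap_cm", "closing_kmh"}."""
    return UnifiedObservation(
        t_s=msg["time_s"],
        source="v2v",
        distance_m=msg["gap_cm"] / 100.0,
        closing_speed_mps=msg["closing_kmh"] / 3.6,
    )


def latest(observations: list[UnifiedObservation], now_s: float,
           max_age_s: float = 0.2) -> list[UnifiedObservation]:
    """Drop records older than max_age_s and keep the newest record per source."""
    newest: dict[str, UnifiedObservation] = {}
    for obs in sorted(observations, key=lambda o: o.t_s):
        if now_s - obs.t_s <= max_age_s:
            newest[obs.source] = obs  # later timestamps overwrite earlier ones
    return list(newest.values())


def should_brake(observations: list[UnifiedObservation],
                 ttc_threshold_s: float = 2.0) -> bool:
    """Example rule written once against the unified format:
    brake if any source predicts a time-to-collision below the threshold."""
    for obs in observations:
        if obs.closing_speed_mps > 0 and obs.distance_m / obs.closing_speed_mps < ttc_threshold_s:
            return True
    return False
```

For example, `radar_obs = from_radar({"ts_ms": 10_000, "range_m": 30.0, "range_rate_mps": -10.0})` alone gives a time-to-collision of 3 s, so `should_brake([radar_obs])` is `False`. Adding `v2v_obs = from_v2v({"time_s": 10.05, "gap_cm": 1500.0, "closing_kmh": 36.0})` (a 15 m gap closing at 10 m/s, i.e. 1.5 s) makes `should_brake(latest([radar_obs, v2v_obs], now_s=10.1))` return `True`.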

In this project, we develop new data pre-processing techniques, multimodal fusion strategies, and efficient decision-making policies that enable reliable and safe autonomous driving. The proposed framework aims to enhance traffic safety, reduce accident rates, and help autonomous vehicles make instant decisions in response to unforeseen events. As groundwork for this proposal, we have conducted preliminary research to analyze vehicular data, create datasets, frame policies, and provide security in the vehicular environment.

Contributors:

- Hesham El-Sayed (Principal Investigator, CIT)

- Manzoor Khan (Co-PI, CIT)

- Salah Bouktif (Co-PI, CIT)

- Henry Alexander (Ph.D. Student)
